One of the most widely used measures for monitoring equipment reliability is MTBF (Mean Time Between Failures). It is used everywhere, from building maintenance plans to justifying capital expenditures. The issue is that MTBF is easy to get wrong, and an inaccurate calculation can make your equipment look far more dependable than it is. In this article, we will review the most common MTBF calculation errors that teams make, often without realizing it. Whether you work in maintenance, operations, or engineering, this guide will help you identify the blind spots and avoid being misled by inaccurate data.

First, What Is MTBF?

Before we get into the mistakes, let’s make sure we’re on the same page about what MTBF actually means.

MTBF stands for Mean Time Between Failures. It’s a way to estimate how long a machine will run before it breaks down. So if a piece of equipment has an MTBF of 1,000 hours, that means it should run for about that long, on average, before something goes wrong.

Now, that “on average” part is important. MTBF isn’t a prediction of exactly when a failure will happen. It’s just the average time between breakdowns based on past data. Some machines might last much longer. Others might fail much sooner. MTBF smooths all of that into one number.

It also assumes that after each failure, the machine is repaired properly and returned to full working condition. If it’s not, the MTBF won’t tell you much.
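As a quick illustration, the usual calculation divides total operating time by the number of failures in that period. The sketch below assumes that definition; the function name and the sample figures are made up for the example.

```python
def mtbf(operating_hours: float, failures: int) -> float:
    """Mean time between failures: operating time divided by failure count."""
    if failures <= 0:
        # With no failures recorded there is nothing to average yet.
        raise ValueError("MTBF is undefined with zero failures")
    return operating_hours / failures


# Hypothetical log: 5,000 operating hours with 5 failures.
print(mtbf(5000, 5))  # 1000.0
```

Note that the function refuses to return a number when no failures have been logged, which matters for the small-data-set mistake discussed later.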

At first glance, MTBF sounds simple. But in the real world, it’s easy to get wrong—especially if the data isn’t solid or the definition of “failure” isn’t clear. That’s where most of the trouble starts.

1. Counting Only the “Big” Failures

A lot of teams only count major breakdowns when calculating MTBF. They skip over smaller issues like sensor glitches, slowdowns, or minor faults that don’t shut the machine down completely. These might seem too small to matter, but they still cause downtime and often point to bigger problems beneath the surface.

When teams only log the big failures and ignore the rest, it leads to MTBF calculation errors. The data ends up giving a false sense of reliability because it’s based on incomplete information.

To avoid this, count every failure that interrupts normal operation. Even the small ones matter if they affect performance or require attention.
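To see how much this can distort the number, here is a small sketch with a made-up failure log. The events, the `major` flag, and the 4,000 operating hours are all hypothetical.

```python
# Hypothetical failure log for one machine over 4,000 operating hours.
OPERATING_HOURS = 4000
failure_log = [
    {"event": "gearbox seizure", "major": True},
    {"event": "sensor glitch", "major": False},
    {"event": "feed slowdown", "major": False},
    {"event": "sensor glitch", "major": False},
    {"event": "motor burnout", "major": True},
    {"event": "minor jam", "major": False},
    {"event": "sensor glitch", "major": False},
    {"event": "feed slowdown", "major": False},
]


def mtbf_hours(hours, events):
    """Operating hours divided by the number of logged failures."""
    return hours / len(events)


major_only = [e for e in failure_log if e["major"]]
mtbf_major = mtbf_hours(OPERATING_HOURS, major_only)
mtbf_all = mtbf_hours(OPERATING_HOURS, failure_log)

print(f"Major failures only: {mtbf_major:.0f} h")  # 2000 h
print(f"All failures:        {mtbf_all:.0f} h")    # 500 h
```

Same machine, same period, but the "big failures only" figure is four times higher than the one that counts every interruption.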

2. Using a Small Data Set

It’s easy to overestimate reliability when you’re working with limited data. This happens a lot with new equipment.

Let’s say your team installs a new pump. It runs for a full month without any issues. You log 720 hours of uptime and no failures, so the pump looks great on paper. Strictly speaking, with zero failures there is no MTBF to calculate yet, only a failure-free run.

But one month isn’t enough to tell you much. The pump is new. It hasn’t had time to wear down or show long-term issues. Early data almost always looks better than reality because nothing’s aged yet and nothing’s gone wrong.

This is a common MTBF calculation error. Short-term numbers give you a false sense of reliability and lead to decisions based on incomplete information.

To avoid this, wait until the machine has gone through a few failure cycles before you start relying on MTBF. The longer you track real performance, the more accurate your numbers will be. This matters even more when you’re trying to build a component MTBF prediction model. If the data set is too small or too early in the equipment’s life, you’re not forecasting anything; you’re guessing. A strong prediction depends on consistent tracking over time.
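One way to put a number on how little a failure-free month proves is a lower confidence bound. The sketch below assumes a constant failure rate (exponentially distributed time between failures), which is a simplification and usually not true for brand-new or worn equipment. Under that assumption, the MTBF you can claim with confidence C after T failure-free hours is T / -ln(1 - C). The function name is hypothetical.

```python
import math


def mtbf_lower_bound_zero_failures(operating_hours, confidence=0.95):
    """One-sided lower bound on MTBF after a failure-free run.

    Assumes a constant failure rate (exponential model).
    """
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    divisor = -math.log(1 - confidence)
    return operating_hours / divisor


# The pump example: 720 hours, no failures.
bound = mtbf_lower_bound_zero_failures(720)
print(f"95% lower bound on MTBF: {bound:.0f} h")  # 240 h
```

In other words, a clean first month only supports an MTBF of roughly 240 hours at 95% confidence under this model, far less than the month itself might suggest.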

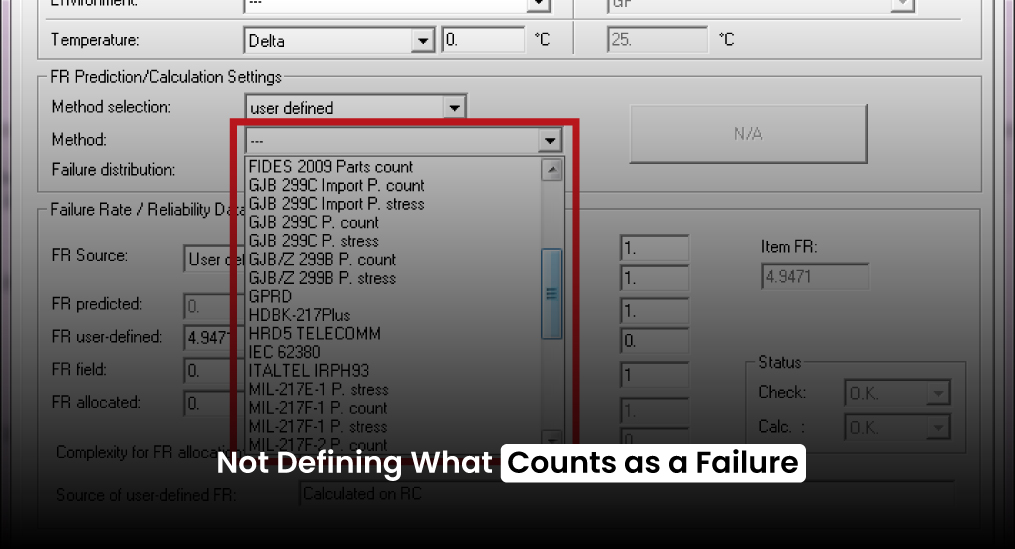

3. Not Defining What Counts as a Failure

Most problems don’t start with a full shutdown. They start small. A reading looks off. A part slows down. Something feels just slightly out of sync. But since the machine is still running, no one calls it a failure.

That’s where it begins.

Then it happens again. Maybe more often. Maybe in a slightly different way. It becomes a pattern, but it still doesn’t get logged. Because in many places, a failure only gets reported when the machine completely stops. Until then, it’s “just a glitch.”

Eventually, the machine does stop. And now, suddenly, it counts.

By that point, though, you’ve already missed the signs that led up to it. None of the smaller issues made it into the system. The data shows one big failure and nothing before it.

This is how MTBF calculation errors happen. When teams don’t agree on what actually counts as a failure, the numbers don’t reflect what’s really happening. Some teams only log hard stops. Others might include performance drops or recurring issues. Without a shared definition, you end up with data that’s all over the place.

Over time, this leads to more MTBF calculation errors. Machines look more reliable than they are, not because they’re performing better, but because a lot of the problems never got counted in the first place.

The fix is simple, but it takes consistency. Define what a failure means across the board. If something stops the machine from doing its job, even if it’s still technically running, it counts. Everyone should track it the same way. That’s how you get MTBF data you can actually use.

4. MTBF Calculation Errors from Ignoring Human Error

Sometimes, a machine fails because someone made an error. Maybe an operator pressed the wrong button. A maintenance technician may have installed a part incorrectly or missed a step during a routine check. These things happen. That’s part of working with machines.

But here’s where it goes wrong: many teams don’t count these failures when they calculate MTBF. The thinking is usually, “It’s not the machine’s fault, so it shouldn’t count.” On the surface, that sounds fair. But it leaves out something important.

If a machine can’t handle the way it’s being used, including small mistakes people are likely to make, then it’s not as reliable as it looks. A system that breaks down because someone didn’t follow the manual exactly isn’t built for the environments in which most teams work. That’s not a people problem. That’s a design or process issue.

This kind of thinking leads to MTBF calculation errors. When we ignore failures caused by human error, we’re not being more accurate; we’re just avoiding uncomfortable truths. We end up reporting reliability numbers that only hold up in perfect conditions, not in the way things run day to day. That gap between ideal scenarios and what happens on the floor is what throws off real-world MTBF accuracy. If your data doesn’t account for how machines are used, including the occasional human mistake, your reliability numbers aren’t grounded in reality.

If the same kind of mistake happens more than once, that should be part of the data. It identifies the weak points, not just in the machine, but in the system surrounding it. And that’s precisely what MTBF is supposed to help you understand.
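As a rough sketch of what that looks like in the data, the example below computes MTBF with and without human-caused failures and flags causes that repeat. The cause labels, the 6,000 operating hours, and the set of "human" causes are all hypothetical.

```python
from collections import Counter

# Hypothetical failure causes logged over 6,000 operating hours.
OPERATING_HOURS = 6000
failure_causes = [
    "bearing wear",
    "operator error",
    "incorrect part installation",
    "operator error",
    "seal leak",
    "operator error",
]
HUMAN_CAUSES = {"operator error", "incorrect part installation"}

equipment_only = [c for c in failure_causes if c not in HUMAN_CAUSES]
mtbf_excluding_human = OPERATING_HOURS / len(equipment_only)
mtbf_including_human = OPERATING_HOURS / len(failure_causes)

# Causes that show up more than once point at a process or design weakness.
repeat_causes = {c: n for c, n in Counter(failure_causes).items() if n > 1}

print(f"Excluding human error: {mtbf_excluding_human:.0f} h")  # 3000 h
print(f"Including human error: {mtbf_including_human:.0f} h")  # 1000 h
print(repeat_causes)  # {'operator error': 3}
```

Keeping the cause on every record lets you report the honest number and still see which failures are worth fixing with training, guarding, or a design change.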

5. Confusing MTBF with Useful Life

A machine can have a high MTBF and still be unreliable over time. Sounds odd, right?

Here’s how it happens: let’s say a motor runs for 2,000 hours between failures. You think that’s great. But if each failure costs 3 days of production and $10,000 in repairs, the high MTBF doesn’t mean much. Or maybe the motor starts failing more often after year 3, even though the early MTBF looked solid. That’s why it’s essential to consider lifecycle cost alongside MTBF: not just how long the machine runs between failures, but what each failure costs you over time. A machine that fails less often but is expensive to repair might end up costing more than one with a lower MTBF but easier fixes.

This is a subtle MTBF calculation error—confusing long intervals between failures with long-term reliability.

What to do instead: Combine MTBF with failure impact, repair cost, and machine age. MTBF is just one piece of the reliability puzzle.
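A minimal sketch of that combination is to turn MTBF into an expected yearly failure cost. Machine A uses the motor figures from above (2,000 hours, 3 days, $10,000). Everything else is an assumption for illustration: continuous operation at 8,760 hours per year, a downtime cost of $5,000 per day, and the numbers for Machine B.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760          # assumption: runs around the clock
DOWNTIME_COST_PER_DAY = 5000   # assumption: hypothetical lost production


@dataclass
class Machine:
    name: str
    mtbf_hours: float
    repair_cost: float
    downtime_days: float

    def annual_failure_cost(self) -> float:
        """Expected failures per year times the cost of each failure."""
        failures_per_year = HOURS_PER_YEAR / self.mtbf_hours
        cost_per_failure = (
            self.repair_cost + self.downtime_days * DOWNTIME_COST_PER_DAY
        )
        return failures_per_year * cost_per_failure


machine_a = Machine("A: high MTBF, costly repairs", 2000, 10_000, 3)
machine_b = Machine("B: lower MTBF, easy fixes", 1000, 1_000, 0.25)

for m in (machine_a, machine_b):
    print(f"{m.name}: ${m.annual_failure_cost():,.0f} per year")
```

Under these assumptions, Machine A costs about $109,500 a year in failures and Machine B about $19,710, even though A has twice the MTBF.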

When MTBF calculation errors sneak into your data, you start making the wrong decisions. You might delay preventive maintenance because you think your machines are fine. You might cut back on spare parts inventory and get caught off guard when failures spike. You might report inflated reliability numbers to leadership, only to lose trust when the machines can’t back them up.

Bad MTBF doesn’t just hurt the maintenance team. It ripples out across operations, finance, and customer delivery.

MTBF is useful, but only if it’s real. It’s tempting to fudge the data to make machines look better. Or to overlook mistakes because “that was just one weird case.” But overestimating reliability sets you up for failure—literally.

Avoiding MTBF calculation errors doesn’t require a PhD. It just takes discipline, consistent definitions, and a willingness to look at the whole picture—even if the picture isn’t pretty.